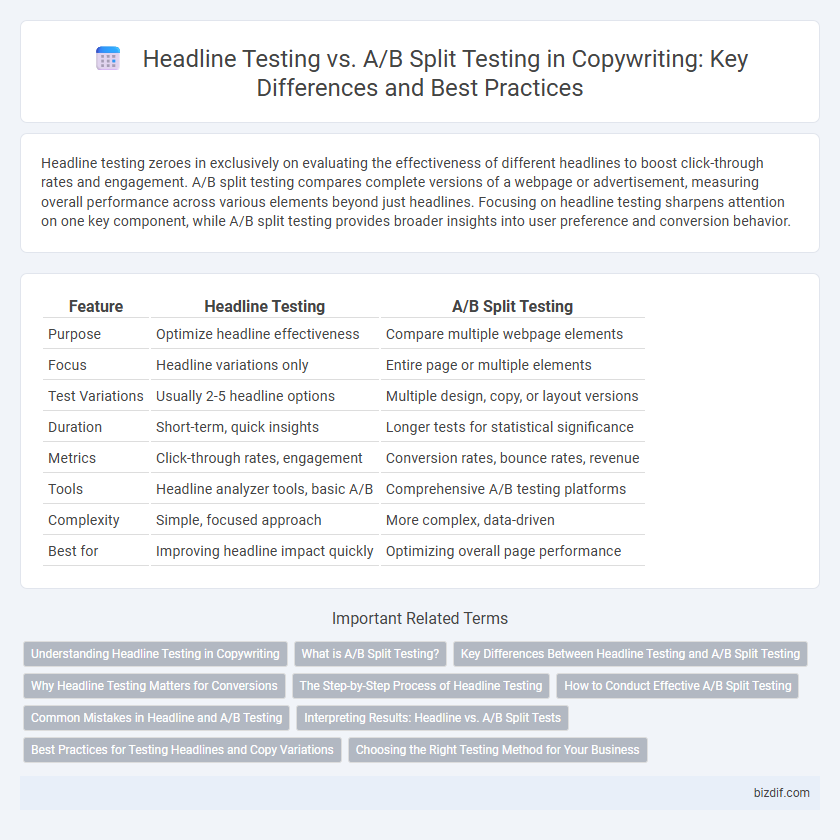

Headline testing focuses exclusively on how different headlines affect click-through rates and engagement. A/B split testing compares complete versions of a webpage or advertisement, measuring overall performance across elements beyond the headline. Headline testing concentrates on one key component, while A/B split testing provides broader insights into user preferences and conversion behavior.

Table of Comparison

| Feature | Headline Testing | A/B Split Testing |
|---|---|---|
| Purpose | Optimize headline effectiveness | Compare versions that differ in one or more page elements |
| Focus | Headline variations only | Entire page or multiple elements |
| Test variations | Usually 2-5 headline options | Multiple design, copy, or layout versions |
| Duration | Often shorter, since clicks accumulate faster than conversions | Usually longer, to reach statistical significance on conversion metrics |
| Metrics | Click-through rate, engagement | Conversion rate, bounce rate, revenue |
| Tools | Headline analyzer tools, basic A/B testing features | Full-featured A/B testing platforms |
| Complexity | Simple, focused approach | More complex, with more variables to control |
| Best for | Improving headline impact quickly | Optimizing overall page performance |

Understanding Headline Testing in Copywriting

Headline testing in copywriting centers on evaluating multiple headline variations to determine which one drives higher engagement and conversion rates. This method isolates headline performance, providing clear insights into which wording, length, or tone resonates best with the target audience. Effective headline testing enhances click-through rates and overall campaign success by optimizing the first impression within marketing content.
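To make "which headline drives higher engagement" concrete, here is a minimal sketch that ranks headline variants by click-through rate (clicks divided by impressions). The `HeadlineVariant` class, the `rank_by_ctr` function, and all headlines and counts are hypothetical illustrations, not any tool's API or real results.

```python
from dataclasses import dataclass


@dataclass
class HeadlineVariant:
    """Aggregated results for one headline (hypothetical data model)."""
    text: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate = clicks / impressions; 0.0 if the headline was never shown.
        return self.clicks / self.impressions if self.impressions else 0.0


def rank_by_ctr(variants):
    """Return the variants sorted from highest to lowest click-through rate."""
    return sorted(variants, key=lambda v: v.ctr, reverse=True)


# Illustrative, made-up counts for three headline options.
variants = [
    HeadlineVariant("Save 2 hours a week on reporting", 5_000, 210),
    HeadlineVariant("The reporting tool teams actually use", 5_000, 165),
    HeadlineVariant("Automate your weekly reports", 5_000, 240),
]

for v in rank_by_ctr(variants):
    print(f"{v.ctr:.2%}  {v.text}")
```

A raw ranking like this only shows which headline is ahead; whether the gap is large enough to trust still needs a significance check.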

What is A/B Split Testing?

A/B split testing is a method of comparing two versions of a webpage or marketing element (such as a headline, call-to-action button, or image) to determine which performs better on a specific metric like click-through rate or conversions. This controlled experiment randomly divides traffic, typically evenly, between version A and version B and runs until enough data has been collected to reach statistical significance. By isolating the variables being compared, A/B split testing helps marketers make data-driven decisions that improve user engagement and overall ROI.
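Dividing traffic between versions is often done by hashing a stable visitor identifier, so a returning visitor keeps seeing the same version. The sketch below assumes each visitor has a stable `user_id`; the `assign_variant` function and the experiment name are hypothetical, and testing platforms use their own assignment logic.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant (hypothetical helper)."""
    # Including the experiment name keeps assignments independent across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The first 32 bits of the hash are effectively uniform, so the modulo spreads visitors evenly.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# Simulate 10,000 visitors and check that the split is close to 50/50.
counts = Counter(assign_variant(f"user-{i}", "headline-test-01") for i in range(10_000))
print(counts)
```

Hashing rather than random draws means no assignment table has to be stored: the same inputs always yield the same variant.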

Key Differences Between Headline Testing and A/B Split Testing

Headline testing evaluates variations of headlines to optimize click-through and engagement rates, while A/B split testing compares different versions of an entire webpage or email to measure overall performance. Headline testing isolates the headline as the primary variable, whereas A/B split testing can involve multiple elements such as layout, images, and call-to-action buttons. Headline testing has a narrower scope focused on headline effectiveness, while A/B split testing provides broader insights into user behavior and conversion metrics across different design strategies.

Why Headline Testing Matters for Conversions

Headline testing targets the first point of user engagement by identifying which headlines generate the highest click-through rates. Better headlines can raise conversion rates, because compelling headlines attract more qualified traffic and can reduce bounce rates. Testing headline variations improves overall campaign effectiveness by aligning messaging with audience preferences.
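The link between headline click-through rate and conversions follows from simple funnel arithmetic: conversions = impressions × CTR × landing-page conversion rate. This sketch assumes, hypothetically, that a better headline leaves the landing-page conversion rate unchanged. In practice a misleading headline can raise clicks while lowering downstream conversions, which is why both metrics are worth tracking. The function name and figures are illustrative.

```python
def expected_conversions(impressions: int, ctr: float, landing_cvr: float) -> float:
    """Expected conversions in a two-step funnel: impression -> click -> conversion."""
    clicks = impressions * ctr
    return clicks * landing_cvr


# Illustrative figures: 10,000 impressions and a 5% landing-page conversion rate,
# assumed (hypothetically) to stay fixed when only the headline changes.
baseline = expected_conversions(10_000, ctr=0.03, landing_cvr=0.05)
improved = expected_conversions(10_000, ctr=0.04, landing_cvr=0.05)
print(f"baseline: {baseline:.0f}, improved headline: {improved:.0f}")
```

Under that assumption, lifting CTR from 3% to 4% raises conversions from 15 to 20, the same one-third relative increase as the CTR itself.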

The Step-by-Step Process of Headline Testing

Headline testing involves creating multiple headline variants to evaluate which one drives higher engagement metrics, such as click-through rates and conversions. The process starts with a hypothesis about which headlines will resonate, based on target audience insights. The variants are then published in a controlled environment, where performance data is collected and analyzed. The resulting metrics identify the most compelling headline and guide further optimization of the campaign.
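The steps above can be captured in a small test plan that records the hypothesis up front, logs impressions and clicks per variant, and refuses to call the test ready until every variant reaches a sample size fixed in advance. Because headline tests often compare several challengers against one control, the sketch also applies a Bonferroni correction (dividing the significance level by the number of comparisons); that correction is an addition here, not something the article prescribes. `HeadlineTest` and its methods are hypothetical, not a specific tool's API.

```python
from dataclasses import dataclass, field


@dataclass
class HeadlineTest:
    """A pre-registered headline test (hypothetical structure)."""
    hypothesis: str
    variants: list[str]               # the first entry is treated as the control headline
    min_impressions_per_variant: int  # fixed before launch, e.g. from a sample-size estimate
    alpha: float = 0.05               # overall significance level
    impressions: dict[str, int] = field(default_factory=dict)
    clicks: dict[str, int] = field(default_factory=dict)

    def record(self, variant: str, clicked: bool) -> None:
        """Log one impression of a headline and whether it was clicked."""
        if variant not in self.variants:
            raise ValueError(f"unknown variant: {variant!r}")
        self.impressions[variant] = self.impressions.get(variant, 0) + 1
        self.clicks[variant] = self.clicks.get(variant, 0) + int(clicked)

    def ctr(self, variant: str) -> float:
        """Click-through rate so far for one variant (0.0 before any impressions)."""
        shown = self.impressions.get(variant, 0)
        return self.clicks.get(variant, 0) / shown if shown else 0.0

    def ready(self) -> bool:
        """True only once every variant has reached the planned sample size."""
        return all(
            self.impressions.get(v, 0) >= self.min_impressions_per_variant
            for v in self.variants
        )

    def per_comparison_alpha(self) -> float:
        """Bonferroni-corrected threshold for each challenger-vs-control comparison."""
        return self.alpha / max(len(self.variants) - 1, 1)


plan = HeadlineTest(
    hypothesis="A benefit-led headline earns a higher CTR than the current headline",
    variants=["control", "benefit-led", "question"],
    min_impressions_per_variant=5_000,  # illustrative value
)
print(plan.per_comparison_alpha())
```

With two challengers and an overall alpha of 0.05, each comparison is judged against 0.025, which guards against declaring a winner by chance when several headlines are tested at once.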

How to Conduct Effective A/B Split Testing

Effective A/B split testing in copywriting starts with two distinct variations, for example two headlines, so that differences in engagement and conversion rates can be measured accurately. Set a statistical significance threshold before the test begins, and change one variable at a time to isolate the impact of that change on user behavior. Testing platforms such as Optimizely collect the data needed for these decisions; note that Google Optimize, a frequently cited option, was discontinued by Google in September 2023.
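One common way to apply a significance threshold to two headline variants is a two-proportion z-test on their click-through or conversion rates. This is a minimal sketch using the pooled standard error and the normal approximation, which assumes independent visitors and reasonably large samples; the function name and counts are illustrative assumptions.

```python
from math import erfc, sqrt


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int):
    """Two-sided z-test for a difference between two rates; returns (z, p_value)."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Illustrative counts: headline A gets 200 clicks from 5,000 visitors, B gets 250.
z, p = two_proportion_z_test(200, 5_000, 250, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these illustrative counts (4% vs 5% CTR), p comes out at roughly 0.016, below a 0.05 threshold chosen before launch. Checking repeatedly and stopping the first time p dips below the threshold inflates false positives, so the comparison is best made once the planned sample size is reached.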

Common Mistakes in Headline and A/B Testing

Common mistakes in headline testing include not analyzing click-through rates and failing to test variations that target different audience segments. In A/B split testing, errors often come from insufficient sample sizes and overly short test durations, which produce unreliable results and misguided decisions. Overlooking headline relevance and emotional appeal can also undermine the performance of both testing methods.
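Insufficient sample size can be avoided by estimating the required traffic before launch. This sketch uses the standard normal-approximation formula for comparing two proportions with a two-sided test; the function name, the 95% confidence and 80% power defaults, and the example rates are assumptions for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in each variant to detect p_baseline -> p_target."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_baseline + p_target) / 2
    # n = (z_alpha*sqrt(2*p_bar*(1-p_bar)) + z_beta*sqrt(p1*(1-p1) + p2*(1-p2)))^2 / (p1-p2)^2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target))
    ) ** 2
    return ceil(numerator / (p_baseline - p_target) ** 2)


# Illustrative goal: detect a CTR lift from 4% to 5%.
n = sample_size_per_variant(0.04, 0.05)
print(n)
```

For a 4% to 5% lift this comes to roughly 6,700 visitors per variant, and smaller lifts need far more. Dividing the total across both variants by daily eligible traffic gives a minimum test duration; many teams also run tests for at least a full week so weekday and weekend behavior are both represented.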

Interpreting Results: Headline vs. A/B Split Tests

Interpreting headline test results centers on click-through rates and engagement, which show how well each headline's message and emotional appeal resonate with the target audience. A/B split testing compares variations of entire web pages or of individual elements, such as headlines, images, and calls to action, to determine which version drives more conversions. In both cases, a difference between variants should be checked for statistical significance before acting on it. Understanding how headline-specific insights differ from broader A/B test results helps marketers refine their content strategy and improve overall campaign effectiveness.
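Beyond a yes/no significance verdict, a confidence interval for the difference between two rates shows the plausible range of the lift, which helps judge whether a winning variant is worth rolling out. This is a minimal sketch of a Wald interval with an unpooled standard error; the function name and counts are illustrative, and for small samples or rates near 0% or 100% other interval methods behave better.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(clicks_a: int, n_a: int, clicks_b: int, n_b: int,
                             confidence: float = 0.95):
    """Wald confidence interval for the absolute lift p_b - p_a."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    # Unpooled standard error: each variant keeps its own observed rate.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Illustrative counts: 200/5,000 clicks for A versus 250/5,000 for B.
low, high = diff_confidence_interval(200, 5_000, 250, 5_000)
print(f"lift between {low:.2%} and {high:.2%} (percentage points)")
```

Here the interval runs from about 0.2 to 1.8 percentage points. It excludes zero, which agrees with a significant result, but its width shows the true lift could be much smaller than the observed one.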

Best Practices for Testing Headlines and Copy Variations

Effective headline testing isolates one variable at a time so its impact on click-through and conversion rates can be measured accurately, and each headline variation should address the target audience's intent and emotional triggers. A/B split testing in copywriting requires randomly assigned segments of the planned sizes and statistically significant results before headlines are changed based on user engagement and behavioral data. Heatmaps and user feedback, used alongside quantitative metrics, add context for refining headline variations and improving performance.
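One way to confirm that segments really have the planned sizes is a sample ratio mismatch check: if traffic was meant to split 50/50 but the observed counts differ by more than chance allows, assignment or tracking is likely broken and the results should not be trusted. This sketch uses a chi-square goodness-of-fit test with one degree of freedom; the function name and the p < 0.001 alert threshold (a common convention) are assumptions, not part of the article.

```python
from math import erfc, sqrt


def sample_ratio_mismatch_p(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """p-value that the observed split could arise from the intended allocation."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))


# Illustrative counts for a test intended to split traffic 50/50.
for n_a, n_b in [(5_030, 4_970), (5_200, 4_800)]:
    p = sample_ratio_mismatch_p(n_a, n_b)
    status = "possible mismatch, investigate" if p < 0.001 else "ok"
    print(f"{n_a} vs {n_b}: p = {p:.5f} ({status})")
```

A 5,030/4,970 split is well within normal variation, while 5,200/4,800 out of 10,000 visitors is very unlikely under a true 50/50 allocation and points to a setup problem.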

Choosing the Right Testing Method for Your Business

Headline testing focuses specifically on evaluating different headlines to determine which captures audience attention and drives clicks, optimizing key elements like wording, length, and emotional appeal. A/B split testing compares two or more variations of entire web pages or marketing elements, providing broader insights into overall user engagement and conversion rates. Choosing the right testing method depends on your business goals, whether you need rapid headline optimization or comprehensive performance data across multiple variables.

Headline Testing vs A/B Split Testing Infographic

bizdif.com