In social media management for pet communities, human moderation brings a nuanced understanding of context, emotional cues, and complex interactions, which helps foster genuine connections and keep members safe. AI moderation offers fast, scalable content filtering, but it can miss subtle tone or sarcasm and may overlook sensitive issues. Combining the two balances empathy with technology and improves both accuracy and efficiency in pet-focused social media environments.

Table of Comparison

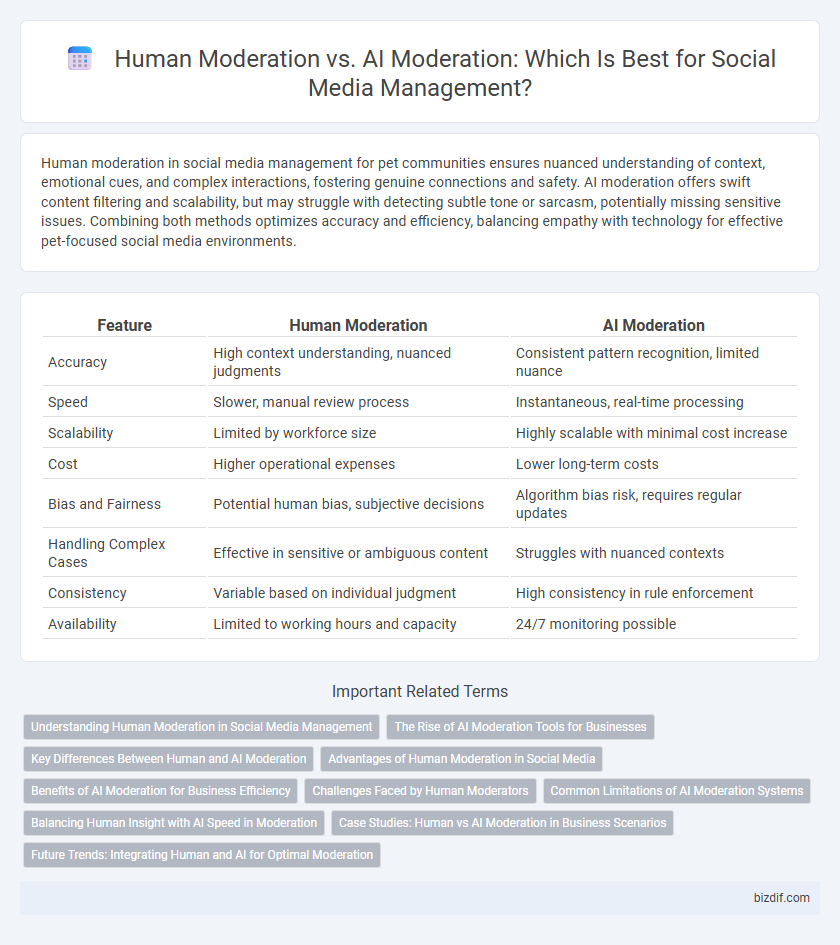

| Feature | Human Moderation | AI Moderation |
|---|---|---|
| Accuracy | High context understanding, nuanced judgments | Consistent pattern recognition, limited nuance |
| Speed | Slower, manual review process | Instantaneous, real-time processing |
| Scalability | Limited by workforce size | Highly scalable with minimal cost increase |
| Cost | Higher operational expenses | Lower long-term costs |
| Bias and Fairness | Potential human bias, subjective decisions | Algorithm bias risk, requires regular updates |
| Handling Complex Cases | Effective in sensitive or ambiguous content | Struggles with nuanced contexts |
| Consistency | Variable based on individual judgment | High consistency in rule enforcement |
| Availability | Limited to working hours and capacity | 24/7 monitoring possible |

Understanding Human Moderation in Social Media Management

Human moderation in social media management relies on trained professionals to evaluate and manage content based on context, cultural nuances, and emotional intelligence, ensuring community guidelines are upheld accurately. This approach excels in addressing complex cases such as hate speech, misinformation, and harassment that require subjective judgment and sensitivity. Human moderators provide a personalized touch that AI systems often struggle to replicate, enhancing trust and engagement within online communities.

The Rise of AI Moderation Tools for Businesses

AI moderation tools have rapidly transformed social media management by offering scalable, real-time content filtering and policy enforcement, reducing reliance on human moderators. Advanced machine learning algorithms analyze vast volumes of user-generated content, detecting hate speech, spam, and inappropriate imagery with increasing accuracy. Businesses benefit from AI's efficiency and cost-effectiveness, while still integrating human oversight to address complex or context-sensitive cases.

Key Differences Between Human and AI Moderation

Human moderation excels in understanding nuanced context, cultural sensitivities, and emotional undertones that AI algorithms often miss, providing personalized and adaptive decision-making. AI moderation processes vast amounts of content rapidly, ensuring real-time detection of spam, hate speech, and policy violations but may struggle with sarcasm, slang, or evolving language trends. Combining human intuition with AI scalability optimizes content review efficiency and accuracy in social media management.

Advantages of Human Moderation in Social Media

Human moderation in social media offers nuanced understanding of context, cultural sensitivity, and emotional intelligence that AI often lacks, ensuring more accurate content evaluation. Skilled moderators can interpret sarcasm, humor, and complex social cues, reducing false positives and negatives. This approach fosters authentic community engagement and builds trust by addressing unique situations with empathy and discretion.
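The "false positives and negatives" mentioned above can be made concrete by comparing moderation decisions against a set of posts whose correct label is already known. The following is a minimal sketch under stated assumptions: the function name `error_rates` and the boolean encoding (True means "flagged" or "actually violating") are illustrative choices, not part of any particular moderation tool.

```python
from typing import List, Tuple


def error_rates(flagged: List[bool], violating: List[bool]) -> Tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate).

    flagged[i]   -- True if the moderator (human or AI) flagged post i
    violating[i] -- True if post i really breaks the guidelines
    Both lists are hypothetical inputs used only for illustration.
    """
    if len(flagged) != len(violating):
        raise ValueError("flagged and violating must have the same length")

    # False positive: flagged although the post was acceptable.
    false_pos = sum(1 for f, v in zip(flagged, violating) if f and not v)
    # False negative: not flagged although the post was violating.
    false_neg = sum(1 for f, v in zip(flagged, violating) if not f and v)

    acceptable = sum(1 for v in violating if not v)
    bad = sum(1 for v in violating if v)

    # Guard against division by zero when one class is absent.
    fpr = false_pos / acceptable if acceptable else 0.0
    fnr = false_neg / bad if bad else 0.0
    return fpr, fnr
```

Running the same function on human decisions and on AI decisions for the same labeled sample gives a like-for-like comparison of the two approaches.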

Benefits of AI Moderation for Business Efficiency

AI moderation improves business efficiency by automating the detection and removal of inappropriate content at scale, reducing the need for extensive human oversight. Machine learning models respond faster and enforce community guidelines consistently, which limits the variability of individual human judgment, although the models carry their own bias risk and need regular updates. Handing routine cases to AI frees human moderators to focus on complex ones, improving overall content quality and user experience.

Challenges Faced by Human Moderators

Human moderators face significant challenges including exposure to harmful content leading to mental health risks, inconsistent decision-making due to subjective judgment, and scalability issues in handling vast volumes of social media interactions. The emotional toll and high turnover rates further hinder the effectiveness of manual moderation efforts. These obstacles emphasize the need for balanced approaches combining human insight with automated tools in social media management.

Common Limitations of AI Moderation Systems

AI moderation systems often struggle with context nuances, sarcasm, and cultural sensitivities, leading to misinterpretation of user content. These tools face difficulties in detecting subtle hate speech, misinformation, or complex emotional tones without human judgment. Despite advances, AI lacks the empathy and adaptive understanding required for accurate, fair content moderation on diverse social platforms.

Balancing Human Insight with AI Speed in Moderation

Human moderation leverages nuanced understanding and emotional intelligence to interpret complex social contexts, while AI moderation provides rapid processing and scalability across vast volumes of content. Combining human insight with AI speed ensures more accurate identification of harmful or inappropriate materials, reducing false positives and negatives. This hybrid approach enhances community safety and engagement by addressing diverse moderation challenges efficiently.
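One common way to picture this hybrid approach is confidence-based routing: the AI acts alone only when it is very sure, and everything in between goes to a human review queue. The sketch below assumes a model that outputs a violation probability between 0 and 1. The thresholds, the `Decision` class, and the function names are hypothetical and would need tuning against real labeled data.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical thresholds, chosen only for illustration.
AUTO_REMOVE_THRESHOLD = 0.95   # at or above: AI removes the post
AUTO_APPROVE_THRESHOLD = 0.05  # at or below: AI approves the post


@dataclass
class Decision:
    post_id: str
    action: str   # "remove", "approve", or "human_review"
    score: float  # model's estimated probability of a violation


def route(post_id: str, violation_score: float) -> Decision:
    """Decide who handles a post based on the model's confidence."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= AUTO_REMOVE_THRESHOLD:
        action = "remove"
    elif violation_score <= AUTO_APPROVE_THRESHOLD:
        action = "approve"
    else:
        # Ambiguous cases are left to human judgment.
        action = "human_review"
    return Decision(post_id, action, violation_score)


def triage(scored_posts: Dict[str, float]) -> Dict[str, List[Decision]]:
    """Split scored posts into automatic and human-review queues."""
    queues: Dict[str, List[Decision]] = {
        "remove": [], "approve": [], "human_review": []
    }
    for post_id, score in scored_posts.items():
        decision = route(post_id, score)
        queues[decision.action].append(decision)
    # Show reviewers the most likely violations first.
    queues["human_review"].sort(key=lambda d: d.score, reverse=True)
    return queues
```

Widening the gap between the two thresholds sends more content to human reviewers, trading speed and cost for accuracy. Narrowing it does the opposite.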

Case Studies: Human vs AI Moderation in Business Scenarios

Comparisons of human and AI moderation in business scenarios show distinct advantages and challenges for each approach. Human moderators excel at nuanced, context-sensitive decisions, which are critical for complex or sensitive content, while AI moderation offers scalability and rapid processing of large data volumes, improving efficiency on high-traffic social platforms. The experience of companies like Facebook and YouTube suggests that combining human oversight with AI tools often produces the most effective content moderation strategy, balancing accuracy with operational speed.

Future Trends: Integrating Human and AI for Optimal Moderation

Future trends in social media management point toward integrating human expertise with AI algorithms for content moderation. AI processes vast amounts of data quickly, while human judgment supplies the understanding of context, cultural sensitivity, and ethical considerations that algorithms lack. This hybrid approach can improve accuracy, help reduce bias, and make the moderation of complex online interactions more efficient.

Human moderation vs AI moderation Infographic

bizdif.com